Posts filed under ‘Software’

Requirements: Make Acceptance Test Cases Too

This post concerns the other end of my professional spectrum: software engineering. I work as a developer at a client firm, a big software house specializing in one huge system for a particular field of business. Our team is responsible for maintaining the system and for developing two to three new versions of it each year.

Lately our team has had reason to doubt our requirements process. The issue arose after we got the first batch of reports from acceptance testing. We received a number of error reports concerning new features and changes we had made. We were able to reject every one of them, because in each case the system worked according to the new requirements.

So, happy ending, yes? No. From the reports written by the testers (members of the organizations that use the system), we could tell that even though the system was implemented according to the requirements, the requirements did not match the testers' expectations.

Stakeholders from the same organizations that took part in acceptance testing had also been actively involved in the requirements specification process. We concluded that the problem was our failure to elicit all the information needed to specify requirements that met the users' needs.

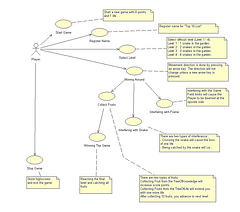

Some context on our development process: we use a fairly traditional waterfall-like setup of deciding the scope, specifying requirements, designing, implementing, system testing, acceptance testing, and delivering. For requirements specification we use use cases. This is the methodology our client supports, so we use and promote it.

We brainstormed about the weaknesses in our requirements specification process. The most plausible explanation was that the use cases were too complicated: the users could not see what effect changing one part of a use case would have on the system as a whole.

As a solution, we came up with writing acceptance-test-like test cases alongside the use cases during requirements specification. This seems to be what the executable requirements approach advocates. For us, the immediate advantage would be concrete input–result chains, based on the requirements changes, that we could go over with the users without the level of abstraction our use cases have.

A secondary benefit would be stakeholder-approved test cases coming straight out of the requirements specification stage. If and when the stakeholders saw the link, they could also save time on acceptance test planning by using the tests generated during requirements specification as a baseline. In the future, the process could be streamlined so that the stakeholders' own acceptance tests become part of the requirements process, with no overlap between the two.
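To make the idea of a concrete input–result chain more tangible, here is a minimal Python sketch of a requirement written as acceptance test rows. The business rule, the `discount` function and the `AcceptanceCase` fields are hypothetical illustrations, not taken from our system; the point is only that each row is something a stakeholder can read and approve during requirements specification, and that the same rows can later be run as acceptance tests.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AcceptanceCase:
    description: str          # wording the stakeholder reviews and approves
    order_total: float        # input
    is_member: bool           # input
    expected_discount: float  # result the stakeholder signs off on


# Hypothetical requirement, for illustration only:
# "Members get 10 % off orders of 100 or more."
def discount(order_total: float, is_member: bool) -> float:
    if is_member and order_total >= 100:
        return round(order_total * 0.10, 2)
    return 0.0


# Concrete input -> result rows agreed with stakeholders (hypothetical values).
CASES = [
    AcceptanceCase("member, exactly at threshold", 100.0, True, 10.0),
    AcceptanceCase("member, just below threshold", 99.99, True, 0.0),
    AcceptanceCase("non-member, above threshold", 250.0, False, 0.0),
]


def run_cases(cases: list[AcceptanceCase]) -> list[str]:
    """Return a message for every case whose actual result differs from the expected one."""
    failures = []
    for case in cases:
        actual = discount(case.order_total, case.is_member)
        if actual != case.expected_discount:
            failures.append(
                f"{case.description}: expected {case.expected_discount}, got {actual}"
            )
    return failures


if __name__ == "__main__":
    problems = run_cases(CASES)
    print("all cases pass" if not problems else "\n".join(problems))
```

Each row doubles as a reviewable requirement and an executable check. A stakeholder who disagrees with an expected value can correct the row before implementation starts rather than filing an error report during acceptance testing.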

It must be noted that the use cases for that particular system are probably more cryptic than ideal. They are more general than use case guidelines advise; we would have less trouble if each use case covered only a single way of using the system. However, I think executable requirements are the way to go in 2009.

Moral of the story: from now on, regardless of the specification format chosen, I intend to include writing acceptance test cases as part of requirements specification.

Discussion of Game Engines suitable for Serious Games Development

Serious Games Source has published a feature, Serious Game Engine Shootout, by Richard Carey. It discusses the best engines for building serious games. The feature's notion of a serious game engine extends the general notion of a game engine (Wikipedia) with requirements specific to serious games, e.g. recording player behaviour and actions and integrating with external systems. The feature covers five game engines built specifically for serious games (Breakaway's mōsbē, Numedeon's NICE, Forterra's OLIVE, Muzzy Lane's SIGMA, and Virtual Heroes' Unreal3 Advanced Learning Technology) as well as commercial game engines such as Gamebryo, Quazal's Eterna, Torque, Multiverse, Unity, Neverwinter Nights and Second Life. It gives basic information on each engine along with a short review.

Sun’s Darkstar Online Game Platform to be Published as Open Source

As reported by Raph Koster, Sun's flexible online game platform, Project Darkstar, is going to be released as open source. Early release binaries are already available, and the source code for version 1 will be released in May. The platform seems to have potential: clients can reportedly be implemented easily in whatever technology you prefer, and even the server side is not restricted to Java. At a quick glance, the core platform seems well thought out and flexible enough to support the development of many kinds of games.

Gamasutra: Automated Test Systems

Mark Cooke of Nihilistic Software has written a Gamasutra article on build and test systems for console games. The article itself is fairly interesting, but its basic premise matters more: a game development project should have tools and processes for making builds and, especially, for testing the game software. Automated builds with unit tests are a good way to catch errors early and keep them from creeping back in during development. In my opinion, the next project in AGL should pursue test-driven development as a lightweight practice to test its feasibility in game development. Here's another article that shows some promise.
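As a rough illustration of what a test-first habit could look like, here is a minimal sketch using Python's standard `unittest` module. The `apply_damage` rule is a hypothetical piece of game logic, not something from Cooke's article. In test-driven development the three tests would be written first and seen to fail, and only then would the function be written to make them pass; an automated build would then run the tests on every check-in.

```python
import unittest


def apply_damage(health: int, damage: int) -> int:
    """Hypothetical game rule: health never drops below zero, negative damage is rejected."""
    if damage < 0:
        raise ValueError("damage must be non-negative")
    return max(0, health - damage)


class ApplyDamageTest(unittest.TestCase):
    def test_regular_hit_reduces_health(self):
        self.assertEqual(apply_damage(100, 30), 70)

    def test_overkill_clamps_to_zero(self):
        self.assertEqual(apply_damage(20, 50), 0)

    def test_negative_damage_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_damage(100, -5)


if __name__ == "__main__":
    # Runs all tests in this module; a build script can fail the build on a non-zero exit code.
    unittest.main()
```

Tests this small run in milliseconds, which is what makes running them on every automated build practical.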